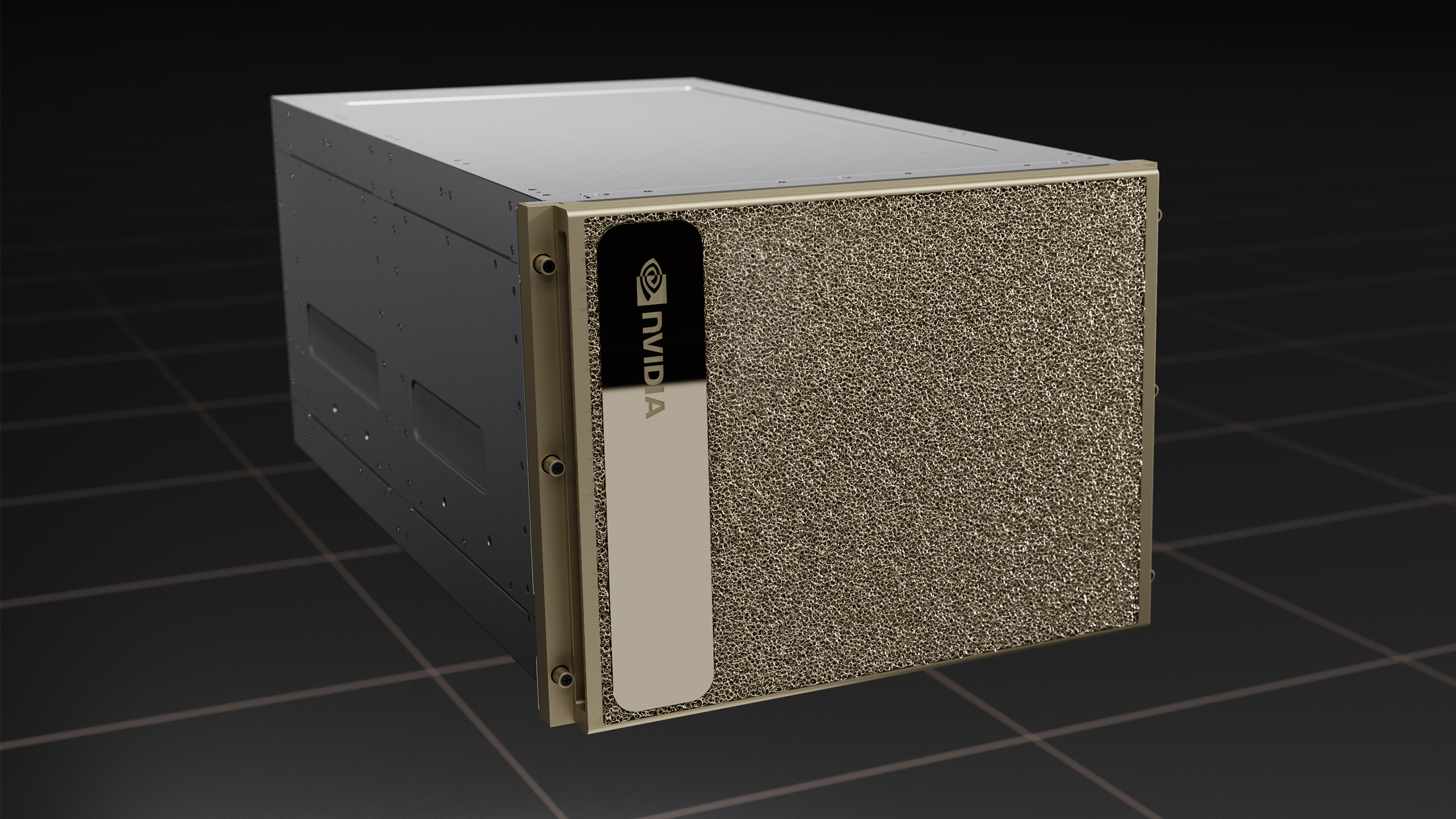

H100 Data Sheet

This datasheet details the performance and product specifications of the NVIDIA H100 Tensor Core GPU. It also explains the technological… The NVIDIA H100 Tensor Core GPU securely accelerates workloads from enterprise to exascale HPC and trillion-parameter AI. The NVIDIA® H100 NVL Tensor Core GPU is the most optimized platform for LLM inference with its high compute density, high memory… This can be used to partition the GPU into as many as seven…


Where Did NVIDIA's H100 GPUs Go This Year? Microsoft and Meta Were the Two Largest Buyers (Caijing app)


DGX H100 Spec Sheet (edu.svet.gob.gt)


NVIDIA Hopper H100 GPU Is Even More Powerful In Latest Specifications


Aixia’s Data Center, based in Gothenburg, is set to one of the


H100 Datasheet Specifications, Features, and More


Nvidia Claims to Double LLM Inference Performance on H100 With New


H100 Transmitters Process Analytics Hamilton Company


(PDF) SAFETY DATA SHEET H100


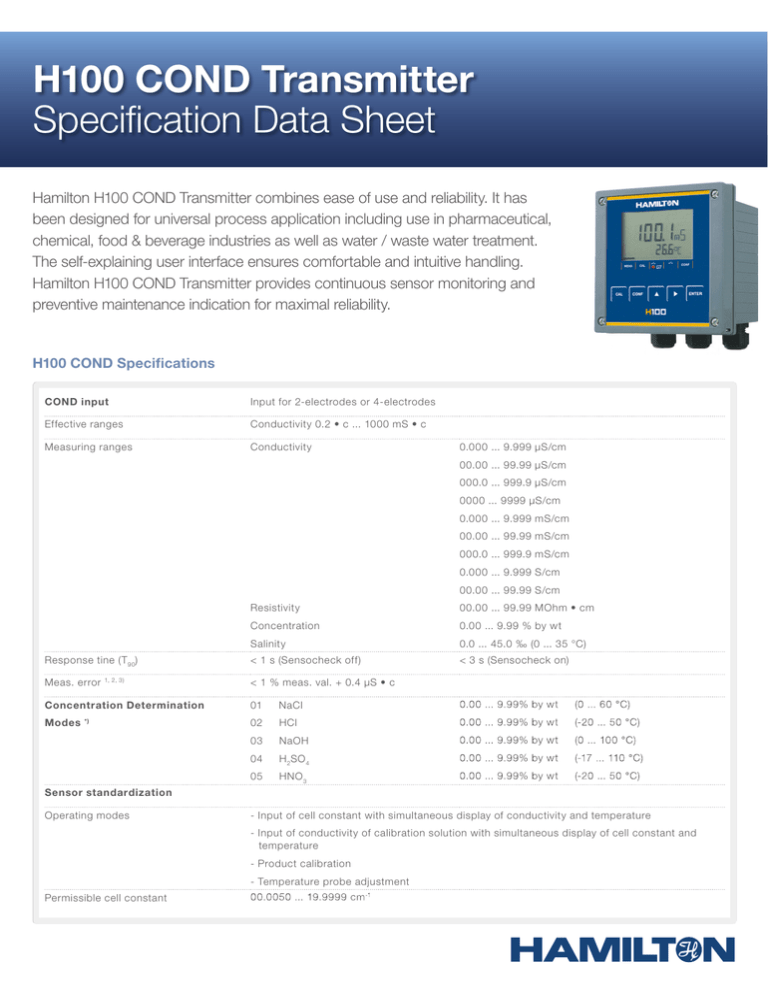

H100 COND Transmitter Specification Data Sheet


NVIDIA H100 80GB PCIe 900210100000000 OpenZeka NVIDIA Embedded

